Wan 2.2 Animate is a unified character animation and character replacement model from Alibaba’s Tongyi Lab that has quickly become popular with creators working in controllable video generation. It takes a single character image plus a driving video and either animates the character to match the motion or replaces the subject in the original clip while preserving scene lighting and color. This guide summarizes information from the official sources so you can evaluate, install, and start using Wan 2.2 Animate in your workflows.

Primary sources used in this article: the official research/announcement materials and docs for Wan-Animate (arXiv technical report • project page • Hugging Face model card), the vendor blog (wan.video), and implementation guides (e.g., ComfyUI official docs • Comfy Org blog).

What Sets Wan 2.2 Animate Apart

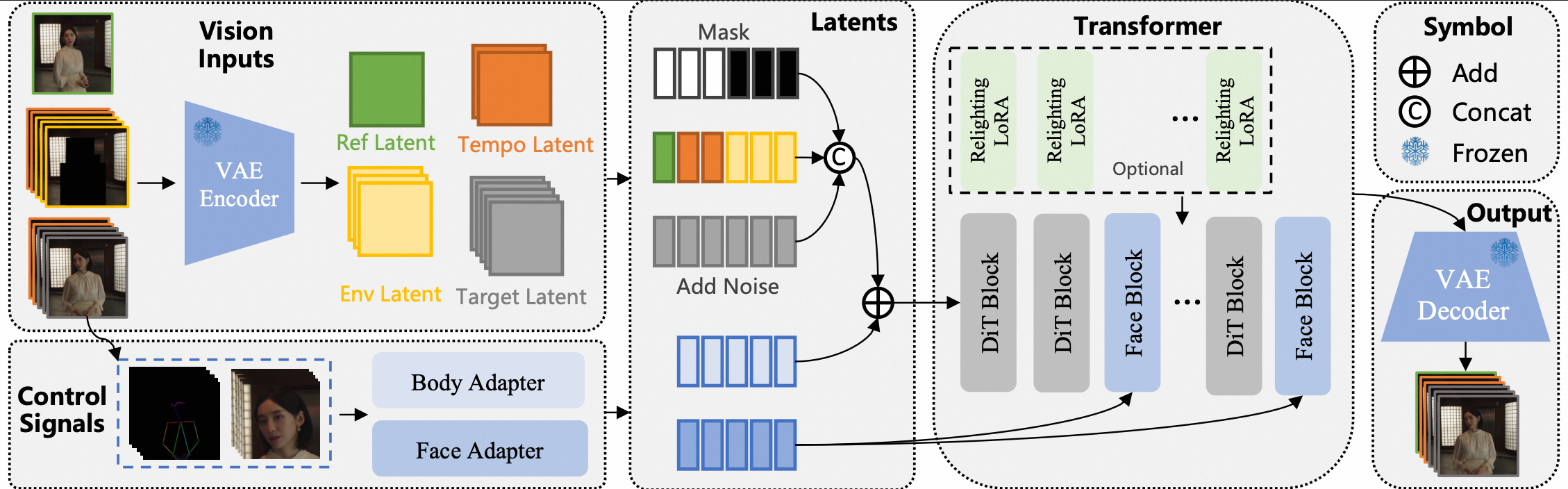

Wan-Animate (the “Animate” component of Wan 2.2) unifies two historically separate tasks—image-to-video character animation and video character replacement—under one model and input paradigm. According to the authors, the system decouples body motion and facial expression control: skeleton-aligned signals drive pose/gesture, while identity-preserving facial features extracted from the source image drive expressions. For replacement, an auxiliary Relighting LoRA helps match environment lighting and color tone so the inserted subject “belongs” in the scene.

Practically, that means Wan 2.2 Animate can produce controllable, realistic outputs with strong temporal consistency (including long sequences via segment chaining/temporal guidance), and offers a dependable path for both social-video creators and professional post-production teams.

Key Features of Wan 2.2 Animate

1) Dual Modes: Animation & Replacement

What it does: Animate a [stylized character portrait] to follow the motion in a [reference dance clip], or swap the main subject in a [talking-head interview] with your [digital character] while preserving the original lighting.

Why it’s notable: You get one model and one workflow for two high-value tasks—no need to maintain separate pipelines. This reduces operational overhead and makes experimentation faster in tools like ComfyUI.

2) Holistic Replication of Motion & Expression

Wan-Animate explicitly separates and injects control signals: skeleton-based representation for body motion; facial latents for expression reenactment. Expression signals are injected via cross-attention to preserve subtle details. Compared to prior open models focused mainly on pose transfer, Wan 2.2 Animate better captures facial expressiveness while keeping body motion faithful—key to believable character animation.

3) Seamless Environmental Integration via Relighting LoRA

In replacement mode, Wan 2.2 Animate employs an auxiliary Relighting LoRA that preserves your character’s appearance while adapting lighting and color to the target scene. This minimizes the “sticker” look common in head/face swaps so the result feels native, which is crucial for ads, narrative edits, and continuity-sensitive shots. For example, you can replace a [studio-lit host] with your [brand mascot] while maintaining the scene’s key/fill/rim balance.

4) Long-Video Generation with Temporal Guidance

Wan-Animate supports segment chaining where subsequent segments condition on prior frames, improving temporal consistency for long clips. Many “pose transfer” models degrade with long outputs; Wan’s segment conditioning helps maintain identity, motion smoothness, and background coherence—useful for music videos, explainers, and creator workflows.
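
The chaining idea can be illustrated with a small planning helper. This is a minimal sketch, not Wan-Animate's actual implementation: the function name `plan_segments`, the segment length, and the number of carried-over frames are hypothetical values chosen for illustration. It only works out which frame range each segment would cover and how many frames from the previous segment it would condition on.

```python
from typing import List, Tuple


def plan_segments(total_frames: int, segment_len: int, carry: int) -> List[Tuple[int, int, int]]:
    """Split a clip into chained segments (hypothetical helper).

    Returns (start, end, cond_frames) tuples, with `end` exclusive.
    `cond_frames` is the number of already-generated frames immediately
    before `start` that would be passed along as conditioning.
    """
    if total_frames <= 0 or segment_len <= 0:
        raise ValueError("total_frames and segment_len must be positive")
    if not 0 <= carry < segment_len:
        raise ValueError("carry must be in [0, segment_len)")

    segments = []
    start = 0
    while start < total_frames:
        end = min(start + segment_len, total_frames)
        # The first segment has no history; later ones reuse the last `carry` frames.
        cond_frames = 0 if start == 0 else carry
        segments.append((start, end, cond_frames))
        start = end
    return segments


if __name__ == "__main__":
    for start, end, cond in plan_segments(total_frames=200, segment_len=77, carry=5):
        print(f"frames {start}..{end - 1}, conditioned on {cond} prior frames")
```

The actual segment length and the amount of temporal context are determined by the model and the workflow you use, so treat the numbers here as placeholders.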

5) Open Access + Multiple Runtimes (Web, Space, Local, ComfyUI)

You can try Wan 2.2 Animate in a browser, run the official demo on Hugging Face Spaces, integrate natively in ComfyUI, or run locally from the model card guides.

Flexible access lowers the barrier to evaluation and production. Teams can prototype online, then move on-prem or to GPU servers without changing models.

6) Performance & Efficiency Options

The Wan 2.2 family introduces architecture and deployment optimizations (e.g., MoE, high-compression VAE). Community tooling like DiffSynth-Studio advertises FP8, sequence parallelism, and offloading for Wan 2.2 pipelines. As you scale to longer videos or high resolutions, these optimizations can reduce VRAM pressure and speed up inference on modern GPUs.

How to Install Wan 2.2 Animate

There are three practical routes: (A) zero-install in the browser, (B) native ComfyUI integration, and (C) local CLI. Choose based on your goals and resources.

A) Try It in the Browser (Fastest)

- Open an official demo. Use the Hugging Face Space Wan2.2-Animate. This lets you upload a character image and a driver clip and choose Move (animation) or Replace mode. Great for quick evaluations without any setup.

- Explore the project examples. The official project page showcases qualitative comparisons and use cases, helping you set expectations and choose good inputs.

- Read the research summary. The technical report explains the control signals, Relighting LoRA, and long-video strategy, which is essential context before deeper adoption.

B) Use Wan 2.2 Animate in ComfyUI (Recommended for Creators)

- Update ComfyUI to the latest version. The official ComfyUI docs maintain current workflow templates and list required models. Up-to-date ComfyUI ensures the Wan nodes and templates load correctly.

- Load an official workflow template. In ComfyUI, go to Workflow → Browse Templates → Video → Wan2.2. Load the “Wan2.2 …” template that matches your task (TI2V, I2V, T2V). For Animate-specific pipelines, consult the Comfy Org blog post announcing native support and linking example graphs.

- Download the required model files. The docs list diffusion checkpoints, VAE, and text encoders needed for your chosen graph. Place them in the correct folders (e.g., ComfyUI/models/diffusion_models, …/vae, …/text_encoders), then relaunch ComfyUI to index them.

- Bind your inputs and run. Attach your [character image] and [driver video], tweak frame length/size, and hit Run. Iterate on denoise strength, guidance, or relighting nodes to balance identity fidelity with environmental match. For troubleshooting and optimization notes, see the ComfyUI post and comments.

C) Install Locally via CLI (For Advanced Users/Servers)

The Hugging Face model card provides authoritative, up-to-date commands for local installation and inference.

- Clone the repo.

git clone https://github.com/Wan-Video/Wan2.2.git && cd Wan2.2

Rationale: Use the official repository so your scripts and paths match documentation.

- Install dependencies (ensure Torch ≥ 2.4.0).

pip install -r requirements.txt

Rationale: Correct dependency versions (e.g., FlashAttention, DiT components) are crucial for stable, performant inference. The card notes that if flash_attn fails to install, you can install the other packages first and install flash_attn last.

- (Optional) Add Speech-to-Video requirements.

pip install -r requirements_s2v.txt

Rationale: Only needed if you plan to try Wan 2.2 S2V. Keeping these optional extras separate avoids cluttering minimal installs.

- Download the Animate model checkpoints. Via Hugging Face CLI:

pip install "huggingface_hub[cli]"

huggingface-cli download Wan-AI/Wan2.2-Animate-14B --local-dir ./Wan2.2-Animate-14B

Rationale: Pull verified weights from an official source. ModelScope is also supported.

- Preprocess inputs. Animation mode:

python ./wan/modules/animate/preprocess/preprocess_data.py --ckpt_path ./Wan2.2-Animate-14B/process_checkpoint --video_path ./examples/wan_animate/animate/video.mp4 --refer_path ./examples/wan_animate/animate/image.jpeg --save_path ./examples/wan_animate/animate/process_results --resolution_area 1280 720 --retarget_flag --use_flux

Replacement mode:

python ./wan/modules/animate/preprocess/preprocess_data.py --ckpt_path ./Wan2.2-Animate-14B/process_checkpoint --video_path ./examples/wan_animate/replace/video.mp4 --refer_path ./examples/wan_animate/replace/image.jpeg --save_path ./examples/wan_animate/replace/process_results --resolution_area 1280 720 --iterations 3 --k 7 --w_len 1 --h_len 1 --replace_flag

Rationale: The scripts extract pose and facial features into the required intermediate “materials” before inference.

- Run inference. Animation:

python generate.py --task animate-14B --ckpt_dir ./Wan2.2-Animate-14B/ --src_root_path ./examples/wan_animate/animate/process_results/ --refert_num 1

Replacement:

python generate.py --task animate-14B --ckpt_dir ./Wan2.2-Animate-14B/ --src_root_path ./examples/wan_animate/replace/process_results/ --refert_num 1 --replace_flag --use_relighting_lora

Rationale: Officially recommended flags for running in single-GPU mode; multi-GPU variants with FSDP + Ulysses are also documented.
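
If you script these steps, it can help to build the two command lines from one place so that the animation and replacement variants stay in sync. The sketch below is an illustrative wrapper, not part of the official repository: the function names `preprocess_cmd` and `generate_cmd` are hypothetical, and the flags and paths are copied from the commands shown above. It only assembles argument lists and does not run anything.

```python
import shlex
from typing import List

# Paths as used in the commands above; adjust to your checkout.
CKPT_DIR = "./Wan2.2-Animate-14B"
PREPROCESS_SCRIPT = "./wan/modules/animate/preprocess/preprocess_data.py"


def preprocess_cmd(video: str, image: str, out_dir: str, replace: bool = False) -> List[str]:
    """Build the preprocessing command for animation or replacement mode (hypothetical helper)."""
    cmd = [
        "python", PREPROCESS_SCRIPT,
        "--ckpt_path", f"{CKPT_DIR}/process_checkpoint",
        "--video_path", video,
        "--refer_path", image,
        "--save_path", out_dir,
        "--resolution_area", "1280", "720",
    ]
    if replace:
        # Replacement-mode flags, as in the replacement command above.
        cmd += ["--iterations", "3", "--k", "7", "--w_len", "1", "--h_len", "1", "--replace_flag"]
    else:
        # Animation-mode flags, as in the animation command above.
        cmd += ["--retarget_flag", "--use_flux"]
    return cmd


def generate_cmd(src_root: str, replace: bool = False) -> List[str]:
    """Build the generate.py command for animation or replacement mode (hypothetical helper)."""
    cmd = [
        "python", "generate.py",
        "--task", "animate-14B",
        "--ckpt_dir", f"{CKPT_DIR}/",
        "--src_root_path", src_root,
        "--refert_num", "1",
    ]
    if replace:
        cmd += ["--replace_flag", "--use_relighting_lora"]
    return cmd


if __name__ == "__main__":
    out = "./examples/wan_animate/replace/process_results"
    print(shlex.join(preprocess_cmd(
        "./examples/wan_animate/replace/video.mp4",
        "./examples/wan_animate/replace/image.jpeg",
        out,
        replace=True,
    )))
    print(shlex.join(generate_cmd(out + "/", replace=True)))
```

To execute a command rather than print it, you could pass the list to `subprocess.run(cmd, check=True)` from the repository root.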

Getting Started with Wan 2.2 Animate

Once installed—or with a ComfyUI workflow ready—use these patterns to get reliable results quickly.

Pick the Right Inputs

- Character image: Use a clean, mid-shot or full-body image with good lighting and minimal motion blur. Identity fidelity and cloth/hair detail transfer benefit from higher-quality inputs.

- Driver video: Prefer steady camera, clear subject, and motion that matches your end goal. Avoid extreme occlusions in early trials.

- Aspect ratio & framing: Keep framing consistent between the portrait and the driver to reduce distortions and unintended crops.
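
The framing advice can be checked before you run anything. The following is a minimal sketch under stated assumptions: the helper names `framing_mismatch` and `center_crop_box` and the 5% tolerance are hypothetical and are not part of any Wan tooling. It compares the aspect ratios of the character image and the driver video, and computes a naive center-crop box that would bring the image to the driver's aspect ratio.

```python
from typing import Tuple

Size = Tuple[int, int]  # (width, height) in pixels


def framing_mismatch(image_size: Size, video_size: Size, tolerance: float = 0.05) -> bool:
    """Return True if the aspect ratios differ by more than `tolerance` (relative)."""
    image_ratio = image_size[0] / image_size[1]
    video_ratio = video_size[0] / video_size[1]
    return abs(image_ratio - video_ratio) / video_ratio > tolerance


def center_crop_box(image_size: Size, target_size: Size) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) for a center crop matching the target aspect ratio."""
    width, height = image_size
    target_w, target_h = target_size
    if width * target_h > height * target_w:
        # Image is wider than the target: keep full height, trim the sides.
        new_w = height * target_w // target_h
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Image is taller than (or equal to) the target: keep full width, trim top and bottom.
    new_h = width * target_h // target_w
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)


if __name__ == "__main__":
    portrait, driver = (1024, 1024), (1280, 720)
    if framing_mismatch(portrait, driver):
        print("Suggested crop box:", center_crop_box(portrait, driver))
```

A center crop can cut off the head or feet of the character, so inspect the result and crop by hand when the subject is not centered.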

Choose the Right Mode

- Animation mode if you want to keep the source image’s background and only animate the character.

- Replacement mode if you need to insert the source identity into the driver’s scene and keep the driver’s environment. Enable relighting to better match the shot.

Recommended Flags & Options (CLI)

- --retarget_flag (animation) helps adapt skeleton motion across different body types/poses.

- --use_flux selects the appropriate conditioning path in preprocessing.

- --use_relighting_lora (replacement) is recommended for environmental consistency (lighting/tone).

- For longer outputs, consider segment generation and “temporal guidance” workflows (ComfyUI or custom). This improves cross-segment continuity.

Example Workflow (CLI)

Objective: Animate a [stylized character portrait] using the motion from a [reference dance clip] and render ~[12s] at [720p].

- Preprocess: Run the animation preprocessing command (above) on your inputs with --resolution_area 1280 720 and --retarget_flag.

- Generate: Use generate.py --task animate-14B pointing to your process_results folder.

- Check Identity: If the face drifts, try a cleaner portrait, tighten cropping, or reduce denoise strength (ComfyUI node settings / CLI flags).

- Export & Post: Export to [ProRes/H.264] and apply color management. If tones drift across time, test a color-matching pass or stabilize exposure.
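
For the export step, one common option is to transcode the generated clip with FFmpeg. This is an assumption on our part: the guide's sources do not prescribe an export tool, and the helper name `export_cmd` and the quality settings below are illustrative choices. The sketch builds an FFmpeg argument list for either H.264 or ProRes output and does not run it.

```python
from typing import List


def export_cmd(src: str, dst: str, codec: str = "h264") -> List[str]:
    """Build an FFmpeg command for H.264 or ProRes export (hypothetical helper)."""
    base = ["ffmpeg", "-i", src]
    if codec == "h264":
        # libx264 with a visually high-quality CRF and a widely compatible pixel format.
        video = ["-c:v", "libx264", "-crf", "18", "-pix_fmt", "yuv420p"]
    elif codec == "prores":
        # prores_ks profile 3 corresponds to ProRes 422 HQ (10-bit 4:2:2).
        video = ["-c:v", "prores_ks", "-profile:v", "3", "-pix_fmt", "yuv422p10le"]
    else:
        raise ValueError(f"unsupported codec: {codec}")
    return base + video + [dst]


if __name__ == "__main__":
    print(" ".join(export_cmd("result.mp4", "result_h264.mp4")))
    print(" ".join(export_cmd("result.mp4", "result_prores.mov", codec="prores")))
```

The file names are placeholders. H.264 suits delivery and sharing, while ProRes is usually the better intermediate if the clip goes on to grading or compositing.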

Example Workflow (ComfyUI)

Objective: Replace the subject in a [talking-head interview] with a [brand character] and preserve studio lighting.

- Load Template: Use a Wan 2.2 Animate template (see Comfy Org blog).

- Bind Inputs: Connect the [brand character image] and [interview clip]. Ensure the mask/subject segmentation node isolates the person cleanly.

- Relight: Turn on or add the Relighting LoRA node/path if available in your graph. Aim for a soft match to key/fill ratios.

- Run & Iterate: If skin tones drift, try a color-preserving node or post color correction. If identity wobbles, reduce motion strength or test a higher-resolution source image.

Tips, Best Practices, and Common Pitfalls

- Mind identity vs. motion trade-offs. Extremely complex motion or occlusion can challenge identity stability. Favor strong inputs first, then push edge cases.

- Use relighting for replacements. The Relighting LoRA exists to improve environmental integration; enable or emulate this in your graph to reduce “cutout” artifacts.

- Color shifts in some community workflows. Users have reported gradual color changes with specific ComfyUI wrapper graphs; switching templates or adding color-management nodes can help (ComfyUI issue discussion).

- Long videos: segment wisely. Chain segments and feed the last frames forward to maintain continuity (report).

- Ethics & consent. If you’re replacing or animating real people, ensure you have rights and consent—especially for commercial or public projects.

Performance Notes & Hardware

Wan 2.2’s broader ecosystem includes optimized deployment options, and the Animate model card documents single- and multi-GPU commands (FSDP + Ulysses) plus efficiency notes by GPU family. For smaller VRAM budgets, community workflows adapt Animate for lower-memory cards; as always, verify provenance and test quality before production.

Conclusion

Wan 2.2 Animate pushes open character animation forward with a pragmatic, unified design: one model for both animation and replacement, precise motion/expression control, and environment-aware relighting. Whether you’re a solo creator prototyping in a browser or a studio building production pipelines in ComfyUI/CLI, the model’s combination of control, quality, and accessibility makes it a compelling choice in the AI animation tool space. Start with the official Space to validate your concept, then adopt a ComfyUI or CLI workflow for repeatable, scalable results.
